I’ve started work on a walkthrough of Chia farming from download to payout. It’s turned out to be a bit more challenging than I thought, but I’m still plowing through.

This video (below) is NOT the walkthrough. It is a quick overview, with an hour of sped-up node syncing. Three hours to get the last four weeks synced… not very fun but it makes a point, and gets some content up on the Andromedary Instinct YouTube channel.

Looking at the snapshot sync process

In March/April 2023, Chia Network posted a snapshot of the blockchain database as of March 31, 2023. They updated it in July to the June 30 snapshot.

Why does this matter? Well, syncing the blockchain to your node is necessary to plot and do transactions (there may be workarounds for both, but the usual path is to sync a full node and then start farming). That’s a pretty slow process.

On a very well-tuned farmer with great network and I/O, I suspect you can get a node synced in a day or so. The last time I synced from scratch on a Raspberry Pi, I believe it took 11 days, and it would take longer today. The machine in this video took three hours to sync four weeks of activity, and that skips over the dust storm phase of the blockchain (which effectively knocked a lot of slower nodes offline until Chia Network made some software enhancements to better deal with dust storms).

By using BitTorrent to grab a snapshot (about 65GB compressed, 125GB uncompressed) in a few hours, you skip closer to the front of the line in syncing status. You can then “resume” syncing from the start of July and even on a suboptimal machine like my test machine, you’ll be done in under a day.
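Before grabbing the snapshot, it is worth checking that the target drive can hold it. Here is a minimal Python sketch of that check, using the rough sizes quoted above. The function names, the 10% headroom, and the assumption that the archive and the unpacked database sit on the same drive at the same time are mine, not anything from Chia’s tooling.

```python
import shutil

GB = 10**9  # decimal gigabytes (assumption: the sizes above are approximate anyway)


def snapshot_space_needed(compressed_gb=65, uncompressed_gb=125, keep_archive=True):
    """Bytes needed while unpacking, if the archive stays on the same drive."""
    total_gb = uncompressed_gb + (compressed_gb if keep_archive else 0)
    return total_gb * GB


def has_room(free_bytes, needed_bytes, headroom=0.10):
    """True if free space covers the need plus a safety margin (hypothetical 10%)."""
    return free_bytes >= needed_bytes * (1 + headroom)


if __name__ == "__main__":
    # Check the drive that holds the current directory; point this at your target drive.
    free = shutil.disk_usage(".").free
    needed = snapshot_space_needed()
    print(f"free: {free / GB:.0f} GB, needed: {needed / GB:.0f} GB, ok: {has_room(free, needed)}")
```

With both copies on one drive, that works out to about 190GB before the node syncs a single new block, which is already tight on a 256GB boot drive that also holds the OS.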

This video also shares one of the better guides to moving your database directory. In my case, the node was originally syncing to the 256GB boot drive, and the database would eventually fill it up. I moved the database off to a second SSD so that the boot drive won’t degrade.
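For anyone who wants the general idea in code, one generic way to relocate a directory is to move it and leave a symlink behind at the old location. This is a sketch of that technique in Python, not necessarily the method the guide uses. The `relocate_dir` helper and the example paths are hypothetical, and it assumes the node is stopped before anything is moved.

```python
import os
import shutil


def relocate_dir(src, dst):
    """Move directory src to dst, then leave a symlink at src pointing to dst."""
    src = os.path.abspath(src)
    dst = os.path.abspath(dst)
    if os.path.islink(src):
        raise ValueError(f"{src} is already a symlink")
    if not os.path.isdir(src):
        raise ValueError(f"{src} is not a directory")
    if os.path.exists(dst):
        raise ValueError(f"{dst} already exists; refusing to overwrite")
    # shutil.move falls back to copy-then-delete when dst is on another filesystem.
    shutil.move(src, dst)
    os.symlink(dst, src, target_is_directory=True)
    return dst
```

A call might look like `relocate_dir(os.path.expanduser("~/.chia/mainnet/db"), "/mnt/ssd2/chia-db")`, where both paths are assumptions about a Linux setup and should be adjusted to wherever your database and second drive actually live.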

Building that system, and what I’d do differently

I realized as I started plotting (heh) the video flow that it would be easier to start with a fresh computer. I had another use for an Alder Lake-based machine coming up, and I managed to get a complete system new in open box for $250 locally, so I went for it. As configured, it cost me less than $500, but if I were building it for long term Chia use without “free” review stock from Amazon Vine, I’d make a few other changes.

Bill of Materials, as built ($537 with caveats)

- HP Pavilion TP01-3016, i5-12400, 12GB RAM – $250 on Facebook Marketplace with smaller NVMe boot drive

- Samsung 860 QVO 1TB SATA SSD – $33 + tax/shipping on eBay this summer

- KingSpec DDR4 32GB 3200MHz – “free” ($97.99 on Amazon)

- FIDECO external SATA enclosure – “free” ($26.99) on Amazon

- 8TB SATA hard drive – like https://amzn.to/44MXXUu – $129 on eBay in 2020

If I were building it today without existing parts stock ($474):

- HP Pavilion TP01-3016, i5-12400, 12GB RAM – $250 on Facebook Marketplace

- Replace boot drive with Solidigm P41 Plus 2TB NVMe – $64.99 on Amazon

- Replace 12GB included RAM with Corsair Vengeance LPX DDR4 32GB 3200MHz – $64.99 on Amazon (or similar)

- FIDECO external SATA enclosure – $26.99 on Amazon (or similar)

- 8TB Hitachi Ultrastar He8 – $67.50 on Amazon (or a larger drive)
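To show where the $537 and $474 figures come from, here is a quick Python tally of both lists. The prices are the ones listed above, before tax and shipping. The `paid` flag marking which items I actually paid cash for (as opposed to “free” review stock) is my own bookkeeping for this sketch.

```python
from decimal import Decimal

# (item, listed price, paid out of pocket?)
AS_BUILT = [
    ("HP Pavilion TP01-3016", "250.00", True),
    ("Samsung 860 QVO 1TB SATA SSD", "33.00", True),
    ("KingSpec DDR4 32GB 3200MHz", "97.99", False),
    ("FIDECO external SATA enclosure", "26.99", False),
    ("8TB SATA hard drive", "129.00", True),
]

BUILT_TODAY = [
    ("HP Pavilion TP01-3016", "250.00", True),
    ("Solidigm P41 Plus 2TB NVMe", "64.99", True),
    ("Corsair Vengeance LPX DDR4 32GB 3200MHz", "64.99", True),
    ("FIDECO external SATA enclosure", "26.99", True),
    ("8TB Hitachi Ultrastar He8", "67.50", True),
]


def total(items, paid_only=False):
    """Sum listed prices, optionally counting only out-of-pocket items."""
    return sum(
        (Decimal(price) for _, price, paid in items if paid or not paid_only),
        Decimal("0"),
    )


if __name__ == "__main__":
    print("As built, list value:   ", total(AS_BUILT))
    print("As built, out of pocket:", total(AS_BUILT, paid_only=True))
    print("Built today:            ", total(BUILT_TODAY))
```

The list value of the as-built system is $536.98, while the out-of-pocket cost was $412.00, which is the “less than $500” mentioned earlier. The build-it-today list comes to $474.47.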

This HP desktop system maxes out at 32GB RAM, so plotting may be limited even with a GPU. However, for storage it’s pretty good, with two 5Gbit USB-A ports, two 10Gbit USB-A ports, and one 10Gbit USB-C port on the front (in addition to two USB 2.0 ports on the back). The board supports two SATA drives with power connectors plus the NVMe. And it’s not a bad CPU – 6 P-Cores with hyperthreading.

Once I finish the video, I’ll be swapping out the boot drive for a better one to install Ubuntu and Arweave on. I still prefer running my node databases on moderate to good performance NVMe over an economy SATA drive whenever possible.

Some other options for plotting and farming systems

This HP desktop build met my primary criterion – the ability to get a system cheaply, quickly, and locally to set up Chia from the ground up. But as noted above, it’s not perfect, and has definite limits (like one x1 and one x16 PCIe slot, only two SATA drives, 32GB max RAM).

Some other options that I can recommend would be:

- Dell T7910 Prebuilt on Amazon – $1088

I have two T7910s, and even though they’re several years old, they have a lot of expandability and performance while being fairly manageable in size, shape, noise level, and power draw.

This one has two E5-2695v4 processors and 512GB RAM included. You can branch out into compressed plots and/or RAM-based plotting, both of which will accelerate your plotting. With several x16 PCIe slots and either four 3.5″ or eight 2.5″ drive bays (not sure which is the case on this system), you can expand with GPUs, storage adapters, internal SAS/SATA drives, or even faster networking.

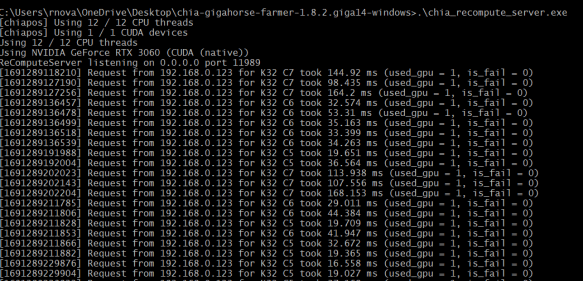

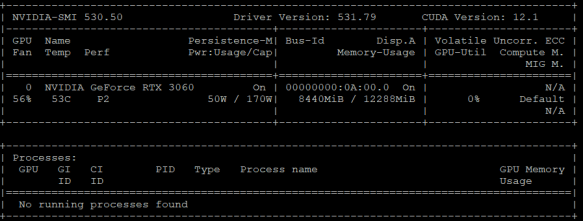

My primary plotter is still a T7910 with two E5-2650Lv4 (14c28t) processors and 128GB RAM, plus an RTX 3060 GPU.

If you’re looking for a slightly different config, there are a number of options with a different mix of CPU, RAM, and storage at the Amazon Renewed store.

Another alternative is to go a bit more modern with a T7920, which uses first-gen Xeon Scalable processors instead of the Xeon E5 v4 line. There are a fair number of first-gen Xeon Scalable Gold processors for double-digit prices on eBay, in case you start with a Silver or Bronze level processor.

- Mini PCs, primarily for farming or uncompressed plotting

There are a lot of NUCs and NUC-Class systems out there. I’ve got systems from ACEMAGICIAN, GEEKOM, and Beelink in the home lab/studio, as well as my classic Chia NUC plotter, the NUC10i7FNH from a couple of years ago.

These are not very expandable in terms of interfaces or GPUs, but with Thunderbolt you could use a Thunderbolt hub to attach additional USB hubs and drives, or a Thunderbolt PCIe enclosure to attach a SAS card or GPU.

At some point, though, if you’re looking at expanding your NUC or mini PC that much, you may want to consider either a NAS or a desktop/workstation/server with SAS enclosures to handle the drives.